Target Attention Is All You Need: Modeling Extremely Long User Action Sequences in Recommender Systems

A deep-dive into Kuaishou's TWIN architecture

Recommender systems try to predict your next action, so it may not be surprising that the most important signals going into such models are user action sequences. The best recommender systems today are consequently those that can efficiently summarize even extremely long user action sequences without loss of important information — much like near-lossless compression algorithms.

In a previous issue, we’ve discussed some of these model architectures, including

DIN, which uses target attention to summarize long user histories into a single embedding per candidate item.

SIM, which first filters user histories using topic matching with respect to the target to be ranked (aka Hard Search) and then passes these filtered histories into target attention. In SIM nomenclature, we also call these two stage general search unit (GSU) and exact search unit (ESU), respectively.

ETA, which creates binary hash signatures for all items in the user history and then uses Hamming distance to the target as a proxy for relevance: the smaller the Hamming distance, the more relevant the item. The trick is to use a hashing algorithm that is locality-sensitive, such that similar vectors end up with similar hash signatures. ETA uses SimHash.

SDIM, which uses hash collision directly as a proxy for relevance in GSU, that is, it simply retrieves all items from the user history that collide with the target in hash space. Again, this only works if the hashing algorithm is locality sensitive.

Alas, if we want to model user action sequences that are life-long, none of these algorithms are sufficient, at least that’s what a pair of papers from Kuaishou (a short-form video streaming app) claims. Their recent papers TWIN (Chang et al 2023) and TWIN-v2 (Si et al 2024) outline a framework to model user action sequences beyond lengths of 10^5, 2 orders of magnitude greater than the best competing algorithms so far, setting a new SoTA bar for ranking models:

Let’s take a look at how they work.

TWIN (2023)

Unlike SIM, ETA, and SDIM, TWIN proposed to use the same target attention in both the ESU as well as in the GSU. At first glance, this may seem counter-intuitive. After all, the purpose of the GSU is to replace the ESU’s target attention with some operation that is computationally cheaper. The trick is that TWIN uses a simplified implementation of TA that reduces its computational complexity.

Simplified Target Attention

In particular, we first write all item embeddings inside the user action sequence — i.e. the “keys” in the target attention — as

where

the first term (h) is an embedding that encodes inherent properties of the item itself, such as the video id, the video topic, author, video duration, etc, and

the second term (c) is another embedding that encodes cross features between the item and the user such as the click timestamp, watch time, user rating, etc.

In other words, the first embedding needs to be computed once per candidate (which is relatively cheap), and the second one once per candidate per user (which is relatively expensive).

Then (leaving out some projection matrices for simplicity), we can approximate the target attention scores as

where

k and q are the embedding representations of the key (user history item) and query (target item to be ranked), respectively,

(k_h dot q) is the part of the target attention due to inherent item properties, i.e. independent of user behavior,

(k_c W_c) is a 1-dimensional projection of k_c, i.e. a bias term reflecting the information contained in the user actions, and

beta is a learnable parameter that controls the degree to which this bias term contributes to the attention score.

The beauty of this reformulation is that it allows us to leverage pre-computed, high-dimensional item embeddings to compute the first term, combined with dynamic, low-dimensional cross feature embeddings to learn the second term. This refactoring into high and low dimensions makes sense because the inherent features such as item ids have much higher cardinality than cross features such as watch time, and hence need more dimensions to be represented. In TWIN, the authors choose dimensions of 64 and 8 for the “H” and “C” embeddings, respectively.

In addition, since the dot products between k_h and q are user-independent, they can be cached and re-used when ranking the same item for multiple users.

A simplified example

For illustration purposes only, suppose we had a model that learns from just 2 features, item ids and user watch time histories. Then, the entire TWIN simplified TA computation would work as follows:

For the candidate item id (query), as well as of the item ids inside the user action sequence (keys), we look up the corresponding embeddings from a dedicated “embedding server”.

We compute the dot product between query and key embeddings. This is the “H” component of the attention score, i.e. the component from inherent item properties. This value can be cached and re-used.

For the watch time feature, we first map it into an id (for example, using binning), and then map that id into an embedding using a low-dimensional embedding table. We then map this embedding into a 1-dimensional bias term with a projection matrix. In this particular example (watch times), that projection matrix may learn to associate small watch times with negative scores and large watch times with positive ones.

Finally, we add the inherent TA score (reflecting the contribution inherent to the item) and the bias term (reflecting the contribution inherent to the user/item interaction) to compute the final TA score.

In this particular example, the third item in the user action sequence would have a score of

0.65 = 0.81 (inherent attention) - 0.16 (bias term from cross features).TWIN’s GSU would then rank all items in the user action sequence by their attention scores and pass the top-k to the ESU to compute the pooled embedding of the user filtered history with attention weights. The k value introduces a trade-off between compute and predictive accuracy: the larger k, the more items we pass into the ESU, resulting in higher recall but also larger computational cost from the ESU’s target attention pooling operation. TWIN uses k=100.

GSU performance: almost an oracle

Typically, at this point, it would be standard practice to measure a proxy for the model’s predictive performance (accuracy, log-loss, etc) as a function of various hyperparameters. However, if we just did that, we would not get a good understanding of TWIN’s two components, GSU and ESU: if the model performance is poor, is it due to the GSU or the ESU?

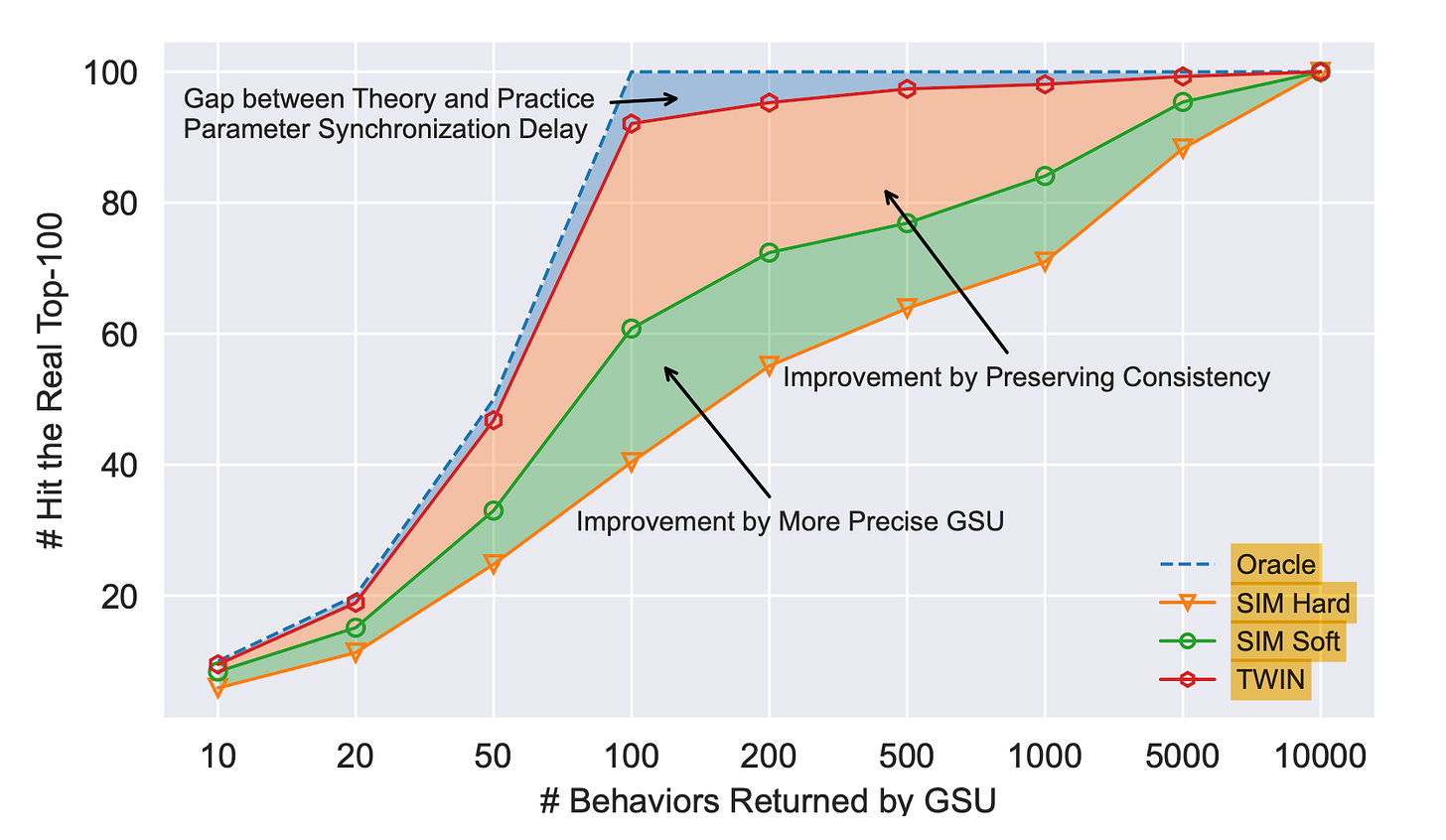

A clever way to measure the GSU’s performance is to simply measure its “hit rate” as a function of k. Here, hit rate is the number of items returned by the GSU that are in the top 100 items identified by exact TA. Hence, the closer this value is to 100, the more indistinguishable the GSU’s approximate TA from exact TA. The best-performing GSU — the “oracle” — would then by definition be TA itself.

And indeed, TWIN’s GSU (red curve in the figure) performs very close to the oracle (blue curve), far outperforming both SIM Hard (orange curve) and SIM Soft (green curve). The only remaining difference between TWIN’s GSU and the oracle, e.g. ~10% at k=100, is due to the shortcuts taken in the TA calculation. Not bad!

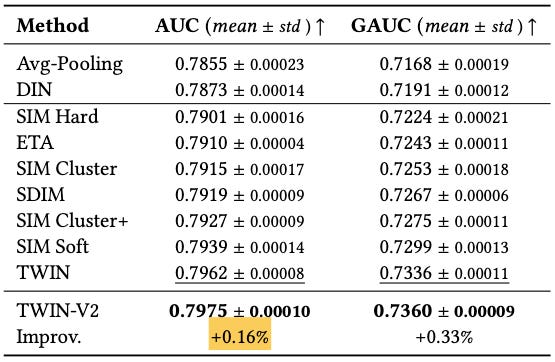

TWIN performance

In offline experiments, the authors measure a +0.29% improvement in AUC from TWIN compared with the closest competitor, SIM Soft. This gain is considered significant: the authors explain that everything above 0.05% is usually enough to bring online gains. And indeed, in online A/B tests, the authors measure improvements in total watch time of 1.4% to 2.8%, depending on the recommendation surface, compared with SIM Soft.

Where exactly does this gain come from? After all, SIM Soft also uses an approximation for TA, namely approximate MIPS (maximum inner product search). The answer is that the gain appears to come predominantly from the bias term from cross features in TWIN, which is not modeled in SIM: in an ablation experiment, the authors found that dropping this bias term would result in 0.2% AUC loss!

TWIN-v2 (2024)

TWIN is not the end of the story though. Simply put, it’s not good enough, at least not for Kuaishou’s use-case: it can handle user action sequences up to 100K, but the most active Kuaishou users watch 3X that amount in a single year!

It is therefore perhaps no surprise that Kuaishou came back this year announcing a new model which improves upon TWIN with a new design choice allowing the model to go further back in time and learn from more user history than any of its predecessors. Enter TWIN-v2.

Hierarchical clustering

At a very high level, TWIN-v2 is identical to TWIN (including GSU and ESU) with the exception that it also includes an offline clustering component with the purpose of pre-aggregating user histories, effectively reducing their lengths by an order of magnitude.

This pre-aggregation is achieved using a hierarchical clustering algorithm, where we keep clustering the cluster members using k-means until each leaf cluster has no more than γ items in it. TWIN-v2 uses γ=20, which in practice results in an average cluster size of around 10 items per cluster. In other words, by using item clusters instead of items directly, we’re able to reduce item cardinality, and hence sequence length, by around an order of magnitude!

Cluster features and cluster-aware TA

Which opens up the question of how we assign features to the item clusters, given the features of its members. This is done as follows:

for numerical features, we simply average the feature values of all cluster members.

for categorical features, where averaging doesn’t make much sense, we instead assign the cluster the feature value of the member that’s nearest to the cluster centroid.

Thus, each cluster ends up with features that somewhat resemble its members, and those are the features used in TWIN’s GSU and ESU modules.

Another important implementation detail is that TWIN-v2 rescales the target attention scores as

α <-- α + log(N)where N is the cluster size. This rescaling ensures that larger clusters get larger target attention scores. The authors call this “cluster-aware target attention”, and show with ablation experiment that this re-scaling accounts for around 0.1% AUC in TWIN-v2.

TWIN-v2 performance

The rest of TWIN-v2 follows closely the design of TWIN-v1, i.e. we use a GSU with approximate TA that filters the clustered histories down to 100 items, followed by an ESU with that re-uses the same TA scores and computes the pooled embeddings with target-attention weights.

In offline experiments, the authors show a 0.16% AUC improvement from TWIN-v2 over TWIN-v1, and online improvements of 0.67% - 0.8% in watch time, depending on the recommendation surface.

Summary

Unlike their predecessors, the TWIN family of ranking models leverages target attention in both the GSU and the ESU. The trick making this work is to decompose the keys into two components, the “H” component reflecting inherent item properties and the “C”component reflecting user/item cross features. With this reformulation, we can then save compute by caching the target attention scores coming from the H components, and approximating the scores coming from the C component as a simple 1D bias term.

TWIN-v2 adds an additional clustering module that first aggregates all video ids into video clusters before passing these clusters into TWIN. The trick here is to make the clustering hierarchical and keep clustering the cluster members until each cluster size is no more than a predetermined threshold. Practically, the authors achieve an average cluster size of 10, hence reducing the cardinality and thus sequence lengths by around an order of magnitude. In Kuaishou’s case, this appears to be exactly the shrinkage needed in order to be able to model life-long user action sequences (at least for now), given that the most active power users watch hundreds of thousands of videos in a year.

Lastly, it is very interesting to see how Kuaishou goes against the LLM-inspired trend of using self-attention and Transformer-like architectures in recommendation. Instead — so the conclusion from this work — target attention, along with some clever optimization tricks, is really all you need. It will be interesting to see how this domain develops over the coming years, and whether self-attention or target-attention (or a combination of both) will win the race for the best recommendations.