Demystifying Mixtral of Experts

Mistral AI's open-source Mixtral 8x7B model made a lot of waves - here's what's under the hood

Mixtral 8x7B, Mistral AI’s new sparse Mixture-of-Experts LLM, recently made a lot of waves, with dramatic headlines such as:

Mistral AI Introduces Mixtral 8x7B: a Sparse Mixture of Experts (SMoE) Language Model Transforming Machine Learning

Mixtral 8x7B: Elevating Language Modeling with Expert Architecture

What is Mixtral 8x7B? The open LLM giving GPT-3.5 a run for its money

Mistral AI’s Mixtral 8x7B surpasses GPT-3.5, shaking up the AI world

Mistral AI is a French AI company founded in 2023 by former engineers from Meta and Google. The company released Mixtral 8x7B, in what was perhaps the most unceremonious release in the history of LLMs, by simply dumping the torrent magnet link on its Twitter account on December 8th, 2023, sparking numerous memes about Mistral’s unconventional way of releasing models.

“Mixtral of Experts” (Jiang et al 2024), the accompanying research paper, was published on arXiv about a month later, on January 8th of this year. Let’s take a look and see if the hype is warranted.

But first, for context, a little bit of history.

Sparse MoE in LLMs: a brief history

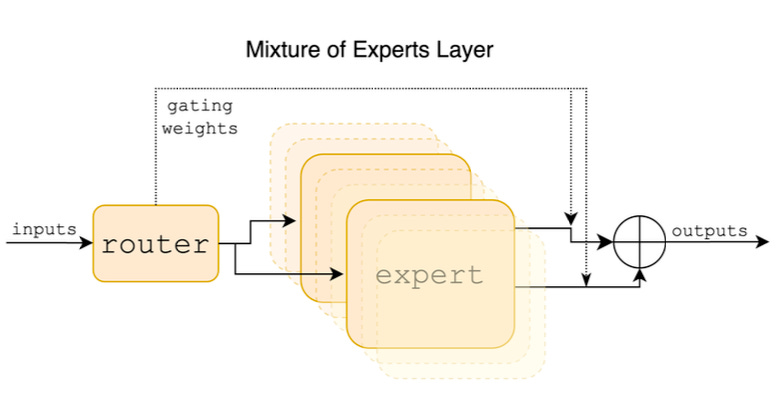

Mixtures of Experts (MoE) models trace back to research from the early 90s (Jacobs et al 1991). The idea is to model a prediction y as the weighted sum of the outputs of a set of experts E, where the weights are determined by a gating network G. It’s a way to divide a large and complex problem into distinct, smaller sub-problems. Divide and conquer, if you will. For example, in the original work, the authors showed how different experts learn to specialize in different decision boundaries in a vowel discrimination problem.
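To make the weighted-sum idea concrete, here is a minimal pure-Python sketch of a dense (soft-routed) mixture of experts, y = Σ_i G(x)_i · E_i(x), assuming a linear gating network followed by a softmax. The function names, the toy experts, and the gate weights are illustrative assumptions, not the setup used by Jacobs et al.

```python
import math

def softmax(logits):
    # Subtract the max before exponentiating for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def dense_moe(x, experts, gate_weights):
    """Soft mixture of experts: y = sum_i G(x)_i * E_i(x).

    x:            input vector (list of floats)
    experts:      list of callables, each mapping x to an output vector
    gate_weights: one weight vector per expert; gate logit i is dot(w_i, x)
    """
    # Linear gating network followed by a softmax: G(x) = softmax(W_g x).
    gates = softmax([sum(w * xi for w, xi in zip(w_i, x)) for w_i in gate_weights])
    # Every expert is evaluated, and its output is scaled by its gate value.
    outputs = [expert(x) for expert in experts]
    return [sum(g * out[d] for g, out in zip(gates, outputs))
            for d in range(len(outputs[0]))]

# Two toy experts: one doubles its input, the other negates it.
experts = [lambda v: [2 * a for a in v], lambda v: [-a for a in v]]
# All-zero gate weights give equal gates (0.5 each), so y = 0.5*2x - 0.5*x = 0.5x.
print(dense_moe([1.0, 2.0], experts, [[0.0, 0.0], [0.0, 0.0]]))  # [0.5, 1.0]
```

Note that every expert is evaluated for every input here, so the cost grows linearly with the number of experts.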

However, what really made MoE fly was top-k routing, an idea first introduced in the 2017 paper “Outrageously large neural networks” (Shazeer et al 2017). The key idea is to compute the output of only the top k experts instead of all of them, which keeps the FLOPs per input roughly constant even as an outrageous number of experts is added. In the paper, the authors combine top-k routing with additional tricks such as load balancing losses, customized parallelism, and gating noise to build a massive (at the time) 6B-parameter LSTM language model, achieving unprecedented performance on the One Billion Word language modeling benchmark.
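A minimal sketch of top-k routing in a toy setting: only the k experts with the largest gate logits are called, and their logits are renormalized with a softmax. This is a simplified illustration under our own assumptions; it omits the gating noise and load balancing losses described above, and the function and helper names are hypothetical.

```python
import math

def top_k_moe(x, experts, gate_weights, k):
    """Sparse mixture of experts with top-k routing (gating noise omitted).

    Only the k experts with the largest gate logits are evaluated; their
    logits are renormalized with a softmax, which matches a softmax over all
    logits where the non-selected ones are set to -infinity.
    Returns (y, selected), where selected lists the indices of the experts used.
    """
    logits = [sum(w * xi for w, xi in zip(w_i, x)) for w_i in gate_weights]
    # Indices of the k largest logits (sorted is stable, so ties keep lower indices first).
    selected = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in selected)
    weights = {i: math.exp(logits[i] - m) for i in selected}
    total = sum(weights.values())
    y = None
    for i in selected:
        out = experts[i](x)  # only the selected experts do any work
        g = weights[i] / total
        y = [g * o for o in out] if y is None else [a + g * o for a, o in zip(y, out)]
    return y, selected

# Eight toy experts that record when they are called; expert i scales its input by i.
calls = []
def make_expert(i):
    def expert(v):
        calls.append(i)
        return [i * a for a in v]
    return expert

experts = [make_expert(i) for i in range(8)]
gate_weights = [[float(i), 0.0] for i in range(8)]  # logit for expert i is i * x[0]
y, selected = top_k_moe([1.0, 0.0], experts, gate_weights, k=2)
print(selected, sorted(calls))  # [7, 6] [6, 7]
```

Six of the eight experts are never called, which is where the compute savings come from: adding more experts grows the parameter count but not the per-input work.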

The next important milestone was the Switch Transformer (Fedus et al 2022), which took top-k routing to its extreme by choosing k=1, that is, routing each input token to just the single most relevant expert, and disproving the long-held assumption that the gate needs to be able to compare at least 2 experts to learn anything.

Today, the case k=1 is also called “hard routing”, in contrast to the soft routing of standard MoE models. By replacing the FFN layers in the T5 Transformer with 128 hard-routed experts, and by introducing additional modeling tricks such as the capacity factor, a new load balancing loss, precision casting, scaled expert initialization, aggressive expert dropout, and customized parallelism, the authors achieved pre-training speed-ups of 7x and unprecedented performance on downstream fine-tuning tasks such as GLUE.
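The capacity factor and the load balancing loss can be sketched together for a single batch of router logits. Following the Switch Transformer paper, each expert accepts at most (tokens per batch / number of experts) × capacity factor tokens, overflow tokens are dropped, and the auxiliary loss is N · Σ_i f_i · P_i, where f_i is the fraction of tokens whose top-1 choice is expert i and P_i is the average router probability for expert i. The function name, the rounding of the capacity, and the first-come-first-served order in which tokens claim expert slots are assumptions of this sketch.

```python
import math

def switch_route(router_logits, capacity_factor):
    """Top-1 (Switch-style) routing of one batch with a per-expert capacity.

    router_logits: one list of per-expert logits for each token.
    Returns (assignment, aux_loss): assignment[t] is the expert chosen for
    token t, or None if that expert was already full and the token is dropped
    (in the Switch Transformer, dropped tokens pass through the residual path).
    """
    num_tokens = len(router_logits)
    num_experts = len(router_logits[0])
    # Expert capacity = (tokens per batch / number of experts) * capacity factor,
    # rounded down here (the rounding is an assumption of this sketch).
    capacity = int(num_tokens / num_experts * capacity_factor)

    # Softmax over experts for each token.
    probs = []
    for logits in router_logits:
        m = max(logits)
        exps = [math.exp(z - m) for z in logits]
        s = sum(exps)
        probs.append([e / s for e in exps])

    # k = 1: each token goes to its single highest-probability expert.
    top1 = [max(range(num_experts), key=p.__getitem__) for p in probs]

    # Tokens claim expert slots in batch order until an expert is full.
    load = [0] * num_experts
    assignment = []
    for e in top1:
        if load[e] < capacity:
            load[e] += 1
            assignment.append(e)
        else:
            assignment.append(None)

    # Load balancing loss N * sum_i f_i * P_i (before scaling by alpha).
    f = [top1.count(i) / num_tokens for i in range(num_experts)]
    P = [sum(p[i] for p in probs) / num_tokens for i in range(num_experts)]
    aux_loss = num_experts * sum(fi * Pi for fi, Pi in zip(f, P))
    return assignment, aux_loss
```

For example, with 4 tokens, 2 experts, and a capacity factor of 1.0, each expert holds 2 tokens, so if all 4 tokens prefer expert 0, the last two are dropped. The paper notes that the auxiliary loss is minimized under uniform routing (where it equals 1.0) and scales it by a small coefficient, α = 0.01, before adding it to the training loss.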

Sparse LLMs were born.

Mixtral of Experts architecture

Mixtral 8x7B is a 32-block Transformer model in which the FFN layer of each Transformer block is replaced by 8 experts that use top-k routing with k=2. The experts themselves are MLPs with a single hidden layer and SwiGLU activation. Formally, the MoE layer produces an output y given by:

$$y = \sum_{i=0}^{n-1} \mathrm{Softmax}\big(\mathrm{Top2}(x \cdot W)\big)_i \cdot \mathrm{SwiGLU}_i(x)$$

where n is the number of experts (here n=8), W is the routing matrix that assigns inputs x to experts, SwiGLU_i is the i-th expert, and Top2 is a function that returns negative infinity for all but the 2 largest inputs, so the softmax assigns zero weight to every expert outside the top 2.
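The equation can be written out directly as a small pure-Python sketch of one MoE layer: Top2 masks all but the two largest router logits to negative infinity, the softmax turns the two survivors into weights that sum to 1, and only those two SwiGLU experts are evaluated. The weight names W1, W2, W3, the helper functions, and the toy dimensions are assumptions for illustration; the attention sublayer, normalization, and residual connections of each Transformer block are omitted.

```python
import math

def silu(z):
    # SiLU / swish: z * sigmoid(z), written to avoid overflow for large |z|.
    if z >= 0:
        return z / (1.0 + math.exp(-z))
    e = math.exp(z)
    return z * e / (1.0 + e)

def matvec(M, v):
    # M is a list of rows; returns the matrix-vector product M v.
    return [sum(m * vi for m, vi in zip(row, v)) for row in M]

def swiglu_expert(x, W1, W3, W2):
    # SwiGLU feed-forward: W2 (SiLU(W1 x) * (W3 x)), with an elementwise product.
    h = [silu(a) * b for a, b in zip(matvec(W1, x), matvec(W3, x))]
    return matvec(W2, h)

def top2(logits):
    # Keep the two largest logits and set every other entry to -infinity.
    keep = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:2]
    return [z if i in keep else -math.inf for i, z in enumerate(logits)]

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]  # math.exp(-inf) == 0.0
    s = sum(exps)
    return [e / s for e in exps]

def mixtral_moe_layer(x, W, experts):
    """y = sum_i Softmax(Top2(x . W))_i * SwiGLU_i(x).

    x:       token representation (length d)
    W:       routing matrix as d rows of n entries, so x . W has length n
    experts: n triples (W1, W3, W2) of SwiGLU weight matrices
    """
    n = len(experts)
    masked = top2([sum(x[j] * W[j][i] for j in range(len(x))) for i in range(n)])
    gates = softmax(masked)
    y = [0.0] * len(x)
    for i, (W1, W3, W2) in enumerate(experts):
        if masked[i] == -math.inf:
            continue  # unselected experts are never evaluated
        out = swiglu_expert(x, W1, W3, W2)
        y = [a + gates[i] * o for a, o in zip(y, out)]
    return y
```

Because the other six experts are skipped, each token pays for two expert MLPs no matter how many experts the layer holds.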

This creates a model with a total of 47B parameters, of which only 13B are active for any given token due to the top-2 routing, resulting in a large-capacity model with the per-token compute cost of a much smaller model.
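The 47B and 13B figures can be checked with back-of-the-envelope arithmetic. The hyperparameters below (model width 4096, expert hidden size 14336, grouped-query attention with 8 key/value heads, a 32,000-token vocabulary, untied input and output embeddings) are taken from the publicly released Mixtral 8x7B configuration rather than from this article, so treat them as an assumption.

```python
# Hyperparameters assumed from the publicly released Mixtral 8x7B configuration.
dim, hidden_dim, n_layers = 4096, 14336, 32
n_heads, n_kv_heads, head_dim = 32, 8, 128
vocab_size, n_experts, top_k = 32000, 8, 2

# One SwiGLU expert: w1 and w3 (dim -> hidden_dim) plus w2 (hidden_dim -> dim).
expert = 3 * dim * hidden_dim
# Attention: query and output projections, plus smaller key/value projections (grouped-query).
attention = 2 * dim * n_heads * head_dim + 2 * dim * n_kv_heads * head_dim
router = dim * n_experts          # the routing matrix W
norms = 2 * dim                   # two normalization weight vectors per block
# Input embedding, separate output head, and the final normalization weights.
embeddings = 2 * vocab_size * dim + dim

def count(experts_per_layer):
    # Parameters touched when each block uses `experts_per_layer` experts.
    per_layer = experts_per_layer * expert + attention + router + norms
    return n_layers * per_layer + embeddings

total = count(n_experts)   # all 8 experts
active = count(top_k)      # only the 2 routed experts per token
print(f"total:  {total / 1e9:.1f}B")   # total:  46.7B
print(f"active: {active / 1e9:.1f}B")  # active: 12.9B
```

The total is well below 8 × 7B = 56B because only the FFN layers are replicated per expert, while attention, the router, and the embeddings are shared. Whether the embedding and output-head parameters count as "active" is a matter of convention; they are included here.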

Benchmark results

The authors evaluate Mixtral 8x7B on benchmark problems from 6 different domains: MMLU (Massive Multitask Language Understanding), knowledge retrieval, reasoning, comprehension, math, and coding. They compare it against Mistral 7B, their own 7B-parameter dense Transformer model, as well as Llama-7B, Llama-13B, and Llama-70B.


Subscribe to Machine Learning Frontiers to keep reading this post and get 7 days of free access to the full post archives.